|

MOAB

4.9.3pre

|

|

MOAB

4.9.3pre

|

#include <SparseLUImpl.h>

Public Types | |

| typedef Matrix< Scalar, Dynamic, 1 > | ScalarVector |

| typedef Matrix< StorageIndex, Dynamic, 1 > | IndexVector |

| typedef Matrix< Scalar, Dynamic, Dynamic, ColMajor > | ScalarMatrix |

| typedef Map< ScalarMatrix, 0, OuterStride<> > | MappedMatrixBlock |

| typedef ScalarVector::RealScalar | RealScalar |

| typedef Ref< Matrix< Scalar, Dynamic, 1 > > | BlockScalarVector |

| typedef Ref< Matrix < StorageIndex, Dynamic, 1 > > | BlockIndexVector |

| typedef LU_GlobalLU_t < IndexVector, ScalarVector > | GlobalLU_t |

| typedef SparseMatrix< Scalar, ColMajor, StorageIndex > | MatrixType |

Protected Member Functions | |

| template<typename VectorType > | |

| Index | expand (VectorType &vec, Index &length, Index nbElts, Index keep_prev, Index &num_expansions) |

| Index | memInit (Index m, Index n, Index annz, Index lwork, Index fillratio, Index panel_size, GlobalLU_t &glu) |

| Allocate various working space for the numerical factorization phase. | |

| template<typename VectorType > | |

| Index | memXpand (VectorType &vec, Index &maxlen, Index nbElts, MemType memtype, Index &num_expansions) |

| Expand the existing storage. | |

| void | heap_relax_snode (const Index n, IndexVector &et, const Index relax_columns, IndexVector &descendants, IndexVector &relax_end) |

| Identify the initial relaxed supernodes. | |

| void | relax_snode (const Index n, IndexVector &et, const Index relax_columns, IndexVector &descendants, IndexVector &relax_end) |

| Identify the initial relaxed supernodes. | |

| Index | snode_dfs (const Index jcol, const Index kcol, const MatrixType &mat, IndexVector &xprune, IndexVector &marker, GlobalLU_t &glu) |

| Index | snode_bmod (const Index jcol, const Index fsupc, ScalarVector &dense, GlobalLU_t &glu) |

| Index | pivotL (const Index jcol, const RealScalar &diagpivotthresh, IndexVector &perm_r, IndexVector &iperm_c, Index &pivrow, GlobalLU_t &glu) |

| Performs the numerical pivotin on the current column of L, and the CDIV operation. | |

| template<typename Traits > | |

| void | dfs_kernel (const StorageIndex jj, IndexVector &perm_r, Index &nseg, IndexVector &panel_lsub, IndexVector &segrep, Ref< IndexVector > repfnz_col, IndexVector &xprune, Ref< IndexVector > marker, IndexVector &parent, IndexVector &xplore, GlobalLU_t &glu, Index &nextl_col, Index krow, Traits &traits) |

| void | panel_dfs (const Index m, const Index w, const Index jcol, MatrixType &A, IndexVector &perm_r, Index &nseg, ScalarVector &dense, IndexVector &panel_lsub, IndexVector &segrep, IndexVector &repfnz, IndexVector &xprune, IndexVector &marker, IndexVector &parent, IndexVector &xplore, GlobalLU_t &glu) |

| Performs a symbolic factorization on a panel of columns [jcol, jcol+w) | |

| void | panel_bmod (const Index m, const Index w, const Index jcol, const Index nseg, ScalarVector &dense, ScalarVector &tempv, IndexVector &segrep, IndexVector &repfnz, GlobalLU_t &glu) |

| Performs numeric block updates (sup-panel) in topological order. | |

| Index | column_dfs (const Index m, const Index jcol, IndexVector &perm_r, Index maxsuper, Index &nseg, BlockIndexVector lsub_col, IndexVector &segrep, BlockIndexVector repfnz, IndexVector &xprune, IndexVector &marker, IndexVector &parent, IndexVector &xplore, GlobalLU_t &glu) |

| Performs a symbolic factorization on column jcol and decide the supernode boundary. | |

| Index | column_bmod (const Index jcol, const Index nseg, BlockScalarVector dense, ScalarVector &tempv, BlockIndexVector segrep, BlockIndexVector repfnz, Index fpanelc, GlobalLU_t &glu) |

| Performs numeric block updates (sup-col) in topological order. | |

| Index | copy_to_ucol (const Index jcol, const Index nseg, IndexVector &segrep, BlockIndexVector repfnz, IndexVector &perm_r, BlockScalarVector dense, GlobalLU_t &glu) |

| Performs numeric block updates (sup-col) in topological order. | |

| void | pruneL (const Index jcol, const IndexVector &perm_r, const Index pivrow, const Index nseg, const IndexVector &segrep, BlockIndexVector repfnz, IndexVector &xprune, GlobalLU_t &glu) |

| Prunes the L-structure. | |

| void | countnz (const Index n, Index &nnzL, Index &nnzU, GlobalLU_t &glu) |

| Count Nonzero elements in the factors. | |

| void | fixupL (const Index n, const IndexVector &perm_r, GlobalLU_t &glu) |

| Fix up the data storage lsub for L-subscripts. | |

Friends | |

| struct | column_dfs_traits |

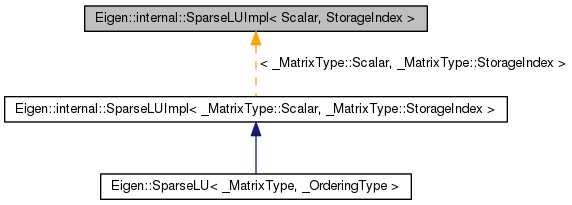

Base class for sparseLU

Definition at line 20 of file SparseLUImpl.h.

| typedef Ref<Matrix<StorageIndex,Dynamic,1> > Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::BlockIndexVector |

Definition at line 29 of file SparseLUImpl.h.

| typedef Ref<Matrix<Scalar,Dynamic,1> > Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::BlockScalarVector |

Definition at line 28 of file SparseLUImpl.h.

| typedef LU_GlobalLU_t<IndexVector, ScalarVector> Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::GlobalLU_t |

Definition at line 30 of file SparseLUImpl.h.

| typedef Matrix<StorageIndex,Dynamic,1> Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::IndexVector |

Reimplemented in Eigen::SparseLU< _MatrixType, _OrderingType >.

Definition at line 24 of file SparseLUImpl.h.

| typedef Map<ScalarMatrix, 0, OuterStride<> > Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::MappedMatrixBlock |

Definition at line 26 of file SparseLUImpl.h.

| typedef SparseMatrix<Scalar,ColMajor,StorageIndex> Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::MatrixType |

Reimplemented in Eigen::SparseLU< _MatrixType, _OrderingType >.

Definition at line 31 of file SparseLUImpl.h.

| typedef ScalarVector::RealScalar Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::RealScalar |

Reimplemented in Eigen::SparseLU< _MatrixType, _OrderingType >.

Definition at line 27 of file SparseLUImpl.h.

| typedef Matrix<Scalar,Dynamic,Dynamic,ColMajor> Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::ScalarMatrix |

Definition at line 25 of file SparseLUImpl.h.

| typedef Matrix<Scalar,Dynamic,1> Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::ScalarVector |

Reimplemented in Eigen::SparseLU< _MatrixType, _OrderingType >.

Definition at line 23 of file SparseLUImpl.h.

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::column_bmod | ( | const Index | jcol, |

| const Index | nseg, | ||

| BlockScalarVector | dense, | ||

| ScalarVector & | tempv, | ||

| BlockIndexVector | segrep, | ||

| BlockIndexVector | repfnz, | ||

| Index | fpanelc, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Performs numeric block updates (sup-col) in topological order.

| jcol | current column to update |

| nseg | Number of segments in the U part |

| dense | Store the full representation of the column |

| tempv | working array |

| segrep | segment representative ... |

| repfnz | ??? First nonzero column in each row ??? ... |

| fpanelc | First column in the current panel |

| glu | Global LU data. |

Definition at line 53 of file SparseLU_column_bmod.h.

{

Index jsupno, k, ksub, krep, ksupno;

Index lptr, nrow, isub, irow, nextlu, new_next, ufirst;

Index fsupc, nsupc, nsupr, luptr, kfnz, no_zeros;

/* krep = representative of current k-th supernode

* fsupc = first supernodal column

* nsupc = number of columns in a supernode

* nsupr = number of rows in a supernode

* luptr = location of supernodal LU-block in storage

* kfnz = first nonz in the k-th supernodal segment

* no_zeros = no lf leading zeros in a supernodal U-segment

*/

jsupno = glu.supno(jcol);

// For each nonzero supernode segment of U[*,j] in topological order

k = nseg - 1;

Index d_fsupc; // distance between the first column of the current panel and the

// first column of the current snode

Index fst_col; // First column within small LU update

Index segsize;

for (ksub = 0; ksub < nseg; ksub++)

{

krep = segrep(k); k--;

ksupno = glu.supno(krep);

if (jsupno != ksupno )

{

// outside the rectangular supernode

fsupc = glu.xsup(ksupno);

fst_col = (std::max)(fsupc, fpanelc);

// Distance from the current supernode to the current panel;

// d_fsupc = 0 if fsupc > fpanelc

d_fsupc = fst_col - fsupc;

luptr = glu.xlusup(fst_col) + d_fsupc;

lptr = glu.xlsub(fsupc) + d_fsupc;

kfnz = repfnz(krep);

kfnz = (std::max)(kfnz, fpanelc);

segsize = krep - kfnz + 1;

nsupc = krep - fst_col + 1;

nsupr = glu.xlsub(fsupc+1) - glu.xlsub(fsupc);

nrow = nsupr - d_fsupc - nsupc;

Index lda = glu.xlusup(fst_col+1) - glu.xlusup(fst_col);

// Perform a triangular solver and block update,

// then scatter the result of sup-col update to dense

no_zeros = kfnz - fst_col;

if(segsize==1)

LU_kernel_bmod<1>::run(segsize, dense, tempv, glu.lusup, luptr, lda, nrow, glu.lsub, lptr, no_zeros);

else

LU_kernel_bmod<Dynamic>::run(segsize, dense, tempv, glu.lusup, luptr, lda, nrow, glu.lsub, lptr, no_zeros);

} // end if jsupno

} // end for each segment

// Process the supernodal portion of L\U[*,j]

nextlu = glu.xlusup(jcol);

fsupc = glu.xsup(jsupno);

// copy the SPA dense into L\U[*,j]

Index mem;

new_next = nextlu + glu.xlsub(fsupc + 1) - glu.xlsub(fsupc);

Index offset = internal::first_multiple<Index>(new_next, internal::packet_traits<Scalar>::size) - new_next;

if(offset)

new_next += offset;

while (new_next > glu.nzlumax )

{

mem = memXpand<ScalarVector>(glu.lusup, glu.nzlumax, nextlu, LUSUP, glu.num_expansions);

if (mem) return mem;

}

for (isub = glu.xlsub(fsupc); isub < glu.xlsub(fsupc+1); isub++)

{

irow = glu.lsub(isub);

glu.lusup(nextlu) = dense(irow);

dense(irow) = Scalar(0.0);

++nextlu;

}

if(offset)

{

glu.lusup.segment(nextlu,offset).setZero();

nextlu += offset;

}

glu.xlusup(jcol + 1) = StorageIndex(nextlu); // close L\U(*,jcol);

/* For more updates within the panel (also within the current supernode),

* should start from the first column of the panel, or the first column

* of the supernode, whichever is bigger. There are two cases:

* 1) fsupc < fpanelc, then fst_col <-- fpanelc

* 2) fsupc >= fpanelc, then fst_col <-- fsupc

*/

fst_col = (std::max)(fsupc, fpanelc);

if (fst_col < jcol)

{

// Distance between the current supernode and the current panel

// d_fsupc = 0 if fsupc >= fpanelc

d_fsupc = fst_col - fsupc;

lptr = glu.xlsub(fsupc) + d_fsupc;

luptr = glu.xlusup(fst_col) + d_fsupc;

nsupr = glu.xlsub(fsupc+1) - glu.xlsub(fsupc); // leading dimension

nsupc = jcol - fst_col; // excluding jcol

nrow = nsupr - d_fsupc - nsupc;

// points to the beginning of jcol in snode L\U(jsupno)

ufirst = glu.xlusup(jcol) + d_fsupc;

Index lda = glu.xlusup(jcol+1) - glu.xlusup(jcol);

MappedMatrixBlock A( &(glu.lusup.data()[luptr]), nsupc, nsupc, OuterStride<>(lda) );

VectorBlock<ScalarVector> u(glu.lusup, ufirst, nsupc);

u = A.template triangularView<UnitLower>().solve(u);

new (&A) MappedMatrixBlock ( &(glu.lusup.data()[luptr+nsupc]), nrow, nsupc, OuterStride<>(lda) );

VectorBlock<ScalarVector> l(glu.lusup, ufirst+nsupc, nrow);

l.noalias() -= A * u;

} // End if fst_col

return 0;

}

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::column_dfs | ( | const Index | m, |

| const Index | jcol, | ||

| IndexVector & | perm_r, | ||

| Index | maxsuper, | ||

| Index & | nseg, | ||

| BlockIndexVector | lsub_col, | ||

| IndexVector & | segrep, | ||

| BlockIndexVector | repfnz, | ||

| IndexVector & | xprune, | ||

| IndexVector & | marker, | ||

| IndexVector & | parent, | ||

| IndexVector & | xplore, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Performs a symbolic factorization on column jcol and decide the supernode boundary.

A supernode representative is the last column of a supernode. The nonzeros in U[*,j] are segments that end at supernodes representatives. The routine returns a list of the supernodal representatives in topological order of the dfs that generates them. The location of the first nonzero in each supernodal segment (supernodal entry location) is also returned.

| m | number of rows in the matrix | |

| jcol | Current column | |

| perm_r | Row permutation | |

| maxsuper | Maximum number of column allowed in a supernode | |

| [in,out] | nseg | Number of segments in current U[*,j] - new segments appended |

| lsub_col | defines the rhs vector to start the dfs | |

| [in,out] | segrep | Segment representatives - new segments appended |

| repfnz | First nonzero location in each row | |

| xprune | ||

| marker | marker[i] == jj, if i was visited during dfs of current column jj; | |

| parent | ||

| xplore | working array | |

| glu | global LU data |

Definition at line 93 of file SparseLU_column_dfs.h.

{

Index jsuper = glu.supno(jcol);

Index nextl = glu.xlsub(jcol);

VectorBlock<IndexVector> marker2(marker, 2*m, m);

column_dfs_traits<IndexVector, ScalarVector> traits(jcol, jsuper, glu, *this);

// For each nonzero in A(*,jcol) do dfs

for (Index k = 0; ((k < m) ? lsub_col[k] != emptyIdxLU : false) ; k++)

{

Index krow = lsub_col(k);

lsub_col(k) = emptyIdxLU;

Index kmark = marker2(krow);

// krow was visited before, go to the next nonz;

if (kmark == jcol) continue;

dfs_kernel(StorageIndex(jcol), perm_r, nseg, glu.lsub, segrep, repfnz, xprune, marker2, parent,

xplore, glu, nextl, krow, traits);

} // for each nonzero ...

Index fsupc;

StorageIndex nsuper = glu.supno(jcol);

StorageIndex jcolp1 = StorageIndex(jcol) + 1;

Index jcolm1 = jcol - 1;

// check to see if j belongs in the same supernode as j-1

if ( jcol == 0 )

{ // Do nothing for column 0

nsuper = glu.supno(0) = 0 ;

}

else

{

fsupc = glu.xsup(nsuper);

StorageIndex jptr = glu.xlsub(jcol); // Not yet compressed

StorageIndex jm1ptr = glu.xlsub(jcolm1);

// Use supernodes of type T2 : see SuperLU paper

if ( (nextl-jptr != jptr-jm1ptr-1) ) jsuper = emptyIdxLU;

// Make sure the number of columns in a supernode doesn't

// exceed threshold

if ( (jcol - fsupc) >= maxsuper) jsuper = emptyIdxLU;

/* If jcol starts a new supernode, reclaim storage space in

* glu.lsub from previous supernode. Note we only store

* the subscript set of the first and last columns of

* a supernode. (first for num values, last for pruning)

*/

if (jsuper == emptyIdxLU)

{ // starts a new supernode

if ( (fsupc < jcolm1-1) )

{ // >= 3 columns in nsuper

StorageIndex ito = glu.xlsub(fsupc+1);

glu.xlsub(jcolm1) = ito;

StorageIndex istop = ito + jptr - jm1ptr;

xprune(jcolm1) = istop; // intialize xprune(jcol-1)

glu.xlsub(jcol) = istop;

for (StorageIndex ifrom = jm1ptr; ifrom < nextl; ++ifrom, ++ito)

glu.lsub(ito) = glu.lsub(ifrom);

nextl = ito; // = istop + length(jcol)

}

nsuper++;

glu.supno(jcol) = nsuper;

} // if a new supernode

} // end else: jcol > 0

// Tidy up the pointers before exit

glu.xsup(nsuper+1) = jcolp1;

glu.supno(jcolp1) = nsuper;

xprune(jcol) = StorageIndex(nextl); // Intialize upper bound for pruning

glu.xlsub(jcolp1) = StorageIndex(nextl);

return 0;

}

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::copy_to_ucol | ( | const Index | jcol, |

| const Index | nseg, | ||

| IndexVector & | segrep, | ||

| BlockIndexVector | repfnz, | ||

| IndexVector & | perm_r, | ||

| BlockScalarVector | dense, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Performs numeric block updates (sup-col) in topological order.

| jcol | current column to update |

| nseg | Number of segments in the U part |

| segrep | segment representative ... |

| repfnz | First nonzero column in each row ... |

| perm_r | Row permutation |

| dense | Store the full representation of the column |

| glu | Global LU data. |

Definition at line 50 of file SparseLU_copy_to_ucol.h.

{

Index ksub, krep, ksupno;

Index jsupno = glu.supno(jcol);

// For each nonzero supernode segment of U[*,j] in topological order

Index k = nseg - 1, i;

StorageIndex nextu = glu.xusub(jcol);

Index kfnz, isub, segsize;

Index new_next,irow;

Index fsupc, mem;

for (ksub = 0; ksub < nseg; ksub++)

{

krep = segrep(k); k--;

ksupno = glu.supno(krep);

if (jsupno != ksupno ) // should go into ucol();

{

kfnz = repfnz(krep);

if (kfnz != emptyIdxLU)

{ // Nonzero U-segment

fsupc = glu.xsup(ksupno);

isub = glu.xlsub(fsupc) + kfnz - fsupc;

segsize = krep - kfnz + 1;

new_next = nextu + segsize;

while (new_next > glu.nzumax)

{

mem = memXpand<ScalarVector>(glu.ucol, glu.nzumax, nextu, UCOL, glu.num_expansions);

if (mem) return mem;

mem = memXpand<IndexVector>(glu.usub, glu.nzumax, nextu, USUB, glu.num_expansions);

if (mem) return mem;

}

for (i = 0; i < segsize; i++)

{

irow = glu.lsub(isub);

glu.usub(nextu) = perm_r(irow); // Unlike the L part, the U part is stored in its final order

glu.ucol(nextu) = dense(irow);

dense(irow) = Scalar(0.0);

nextu++;

isub++;

}

} // end nonzero U-segment

} // end if jsupno

} // end for each segment

glu.xusub(jcol + 1) = nextu; // close U(*,jcol)

return 0;

}

| void Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::countnz | ( | const Index | n, |

| Index & | nnzL, | ||

| Index & | nnzU, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Count Nonzero elements in the factors.

Definition at line 21 of file SparseLU_Utils.h.

{

nnzL = 0;

nnzU = (glu.xusub)(n);

Index nsuper = (glu.supno)(n);

Index jlen;

Index i, j, fsupc;

if (n <= 0 ) return;

// For each supernode

for (i = 0; i <= nsuper; i++)

{

fsupc = glu.xsup(i);

jlen = glu.xlsub(fsupc+1) - glu.xlsub(fsupc);

for (j = fsupc; j < glu.xsup(i+1); j++)

{

nnzL += jlen;

nnzU += j - fsupc + 1;

jlen--;

}

}

}

| void Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::dfs_kernel | ( | const StorageIndex | jj, |

| IndexVector & | perm_r, | ||

| Index & | nseg, | ||

| IndexVector & | panel_lsub, | ||

| IndexVector & | segrep, | ||

| Ref< IndexVector > | repfnz_col, | ||

| IndexVector & | xprune, | ||

| Ref< IndexVector > | marker, | ||

| IndexVector & | parent, | ||

| IndexVector & | xplore, | ||

| GlobalLU_t & | glu, | ||

| Index & | nextl_col, | ||

| Index | krow, | ||

| Traits & | traits | ||

| ) | [protected] |

Definition at line 62 of file SparseLU_panel_dfs.h.

{

StorageIndex kmark = marker(krow);

// For each unmarked krow of jj

marker(krow) = jj;

StorageIndex kperm = perm_r(krow);

if (kperm == emptyIdxLU ) {

// krow is in L : place it in structure of L(*, jj)

panel_lsub(nextl_col++) = StorageIndex(krow); // krow is indexed into A

traits.mem_expand(panel_lsub, nextl_col, kmark);

}

else

{

// krow is in U : if its supernode-representative krep

// has been explored, update repfnz(*)

// krep = supernode representative of the current row

StorageIndex krep = glu.xsup(glu.supno(kperm)+1) - 1;

// First nonzero element in the current column:

StorageIndex myfnz = repfnz_col(krep);

if (myfnz != emptyIdxLU )

{

// Representative visited before

if (myfnz > kperm ) repfnz_col(krep) = kperm;

}

else

{

// Otherwise, perform dfs starting at krep

StorageIndex oldrep = emptyIdxLU;

parent(krep) = oldrep;

repfnz_col(krep) = kperm;

StorageIndex xdfs = glu.xlsub(krep);

Index maxdfs = xprune(krep);

StorageIndex kpar;

do

{

// For each unmarked kchild of krep

while (xdfs < maxdfs)

{

StorageIndex kchild = glu.lsub(xdfs);

xdfs++;

StorageIndex chmark = marker(kchild);

if (chmark != jj )

{

marker(kchild) = jj;

StorageIndex chperm = perm_r(kchild);

if (chperm == emptyIdxLU)

{

// case kchild is in L: place it in L(*, j)

panel_lsub(nextl_col++) = kchild;

traits.mem_expand(panel_lsub, nextl_col, chmark);

}

else

{

// case kchild is in U :

// chrep = its supernode-rep. If its rep has been explored,

// update its repfnz(*)

StorageIndex chrep = glu.xsup(glu.supno(chperm)+1) - 1;

myfnz = repfnz_col(chrep);

if (myfnz != emptyIdxLU)

{ // Visited before

if (myfnz > chperm)

repfnz_col(chrep) = chperm;

}

else

{ // Cont. dfs at snode-rep of kchild

xplore(krep) = xdfs;

oldrep = krep;

krep = chrep; // Go deeper down G(L)

parent(krep) = oldrep;

repfnz_col(krep) = chperm;

xdfs = glu.xlsub(krep);

maxdfs = xprune(krep);

} // end if myfnz != -1

} // end if chperm == -1

} // end if chmark !=jj

} // end while xdfs < maxdfs

// krow has no more unexplored nbrs :

// Place snode-rep krep in postorder DFS, if this

// segment is seen for the first time. (Note that

// "repfnz(krep)" may change later.)

// Baktrack dfs to its parent

if(traits.update_segrep(krep,jj))

//if (marker1(krep) < jcol )

{

segrep(nseg) = krep;

++nseg;

//marker1(krep) = jj;

}

kpar = parent(krep); // Pop recursion, mimic recursion

if (kpar == emptyIdxLU)

break; // dfs done

krep = kpar;

xdfs = xplore(krep);

maxdfs = xprune(krep);

} while (kpar != emptyIdxLU); // Do until empty stack

} // end if (myfnz = -1)

} // end if (kperm == -1)

}

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::expand | ( | VectorType & | vec, |

| Index & | length, | ||

| Index | nbElts, | ||

| Index | keep_prev, | ||

| Index & | num_expansions | ||

| ) | [protected] |

Expand the existing storage to accomodate more fill-ins

| vec | Valid pointer to the vector to allocate or expand | |

| [in,out] | length | At input, contain the current length of the vector that is to be increased. At output, length of the newly allocated vector |

| [in] | nbElts | Current number of elements in the factors |

| keep_prev | 1: use length and do not expand the vector; 0: compute new_len and expand | |

| [in,out] | num_expansions | Number of times the memory has been expanded |

Definition at line 63 of file SparseLU_Memory.h.

{

float alpha = 1.5; // Ratio of the memory increase

Index new_len; // New size of the allocated memory

if(num_expansions == 0 || keep_prev)

new_len = length ; // First time allocate requested

else

new_len = (std::max)(length+1,Index(alpha * length));

VectorType old_vec; // Temporary vector to hold the previous values

if (nbElts > 0 )

old_vec = vec.segment(0,nbElts);

//Allocate or expand the current vector

#ifdef EIGEN_EXCEPTIONS

try

#endif

{

vec.resize(new_len);

}

#ifdef EIGEN_EXCEPTIONS

catch(std::bad_alloc& )

#else

if(!vec.size())

#endif

{

if (!num_expansions)

{

// First time to allocate from LUMemInit()

// Let LUMemInit() deals with it.

return -1;

}

if (keep_prev)

{

// In this case, the memory length should not not be reduced

return new_len;

}

else

{

// Reduce the size and increase again

Index tries = 0; // Number of attempts

do

{

alpha = (alpha + 1)/2;

new_len = (std::max)(length+1,Index(alpha * length));

#ifdef EIGEN_EXCEPTIONS

try

#endif

{

vec.resize(new_len);

}

#ifdef EIGEN_EXCEPTIONS

catch(std::bad_alloc& )

#else

if (!vec.size())

#endif

{

tries += 1;

if ( tries > 10) return new_len;

}

} while (!vec.size());

}

}

//Copy the previous values to the newly allocated space

if (nbElts > 0)

vec.segment(0, nbElts) = old_vec;

length = new_len;

if(num_expansions) ++num_expansions;

return 0;

}

| void Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::fixupL | ( | const Index | n, |

| const IndexVector & | perm_r, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Fix up the data storage lsub for L-subscripts.

It removes the subscripts sets for structural pruning, and applies permutation to the remaining subscripts

Definition at line 52 of file SparseLU_Utils.h.

{

Index fsupc, i, j, k, jstart;

StorageIndex nextl = 0;

Index nsuper = (glu.supno)(n);

// For each supernode

for (i = 0; i <= nsuper; i++)

{

fsupc = glu.xsup(i);

jstart = glu.xlsub(fsupc);

glu.xlsub(fsupc) = nextl;

for (j = jstart; j < glu.xlsub(fsupc + 1); j++)

{

glu.lsub(nextl) = perm_r(glu.lsub(j)); // Now indexed into P*A

nextl++;

}

for (k = fsupc+1; k < glu.xsup(i+1); k++)

glu.xlsub(k) = nextl; // other columns in supernode i

}

glu.xlsub(n) = nextl;

}

| void Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::heap_relax_snode | ( | const Index | n, |

| IndexVector & | et, | ||

| const Index | relax_columns, | ||

| IndexVector & | descendants, | ||

| IndexVector & | relax_end | ||

| ) | [protected] |

Identify the initial relaxed supernodes.

This routine applied to a symmetric elimination tree. It assumes that the matrix has been reordered according to the postorder of the etree

| n | The number of columns |

| et | elimination tree |

| relax_columns | Maximum number of columns allowed in a relaxed snode |

| descendants | Number of descendants of each node in the etree |

| relax_end | last column in a supernode |

Definition at line 46 of file SparseLU_heap_relax_snode.h.

{

// The etree may not be postordered, but its heap ordered

IndexVector post;

internal::treePostorder(StorageIndex(n), et, post); // Post order etree

IndexVector inv_post(n+1);

for (StorageIndex i = 0; i < n+1; ++i) inv_post(post(i)) = i; // inv_post = post.inverse()???

// Renumber etree in postorder

IndexVector iwork(n);

IndexVector et_save(n+1);

for (Index i = 0; i < n; ++i)

{

iwork(post(i)) = post(et(i));

}

et_save = et; // Save the original etree

et = iwork;

// compute the number of descendants of each node in the etree

relax_end.setConstant(emptyIdxLU);

Index j, parent;

descendants.setZero();

for (j = 0; j < n; j++)

{

parent = et(j);

if (parent != n) // not the dummy root

descendants(parent) += descendants(j) + 1;

}

// Identify the relaxed supernodes by postorder traversal of the etree

Index snode_start; // beginning of a snode

StorageIndex k;

Index nsuper_et_post = 0; // Number of relaxed snodes in postordered etree

Index nsuper_et = 0; // Number of relaxed snodes in the original etree

StorageIndex l;

for (j = 0; j < n; )

{

parent = et(j);

snode_start = j;

while ( parent != n && descendants(parent) < relax_columns )

{

j = parent;

parent = et(j);

}

// Found a supernode in postordered etree, j is the last column

++nsuper_et_post;

k = StorageIndex(n);

for (Index i = snode_start; i <= j; ++i)

k = (std::min)(k, inv_post(i));

l = inv_post(j);

if ( (l - k) == (j - snode_start) ) // Same number of columns in the snode

{

// This is also a supernode in the original etree

relax_end(k) = l; // Record last column

++nsuper_et;

}

else

{

for (Index i = snode_start; i <= j; ++i)

{

l = inv_post(i);

if (descendants(i) == 0)

{

relax_end(l) = l;

++nsuper_et;

}

}

}

j++;

// Search for a new leaf

while (descendants(j) != 0 && j < n) j++;

} // End postorder traversal of the etree

// Recover the original etree

et = et_save;

}

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::memInit | ( | Index | m, |

| Index | n, | ||

| Index | annz, | ||

| Index | lwork, | ||

| Index | fillratio, | ||

| Index | panel_size, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Allocate various working space for the numerical factorization phase.

| m | number of rows of the input matrix |

| n | number of columns |

| annz | number of initial nonzeros in the matrix |

| lwork | if lwork=-1, this routine returns an estimated size of the required memory |

| glu | persistent data to facilitate multiple factors : will be deleted later ?? |

| fillratio | estimated ratio of fill in the factors |

| panel_size | Size of a panel |

Definition at line 151 of file SparseLU_Memory.h.

{

Index& num_expansions = glu.num_expansions; //No memory expansions so far

num_expansions = 0;

glu.nzumax = glu.nzlumax = (std::min)(fillratio * (annz+1) / n, m) * n; // estimated number of nonzeros in U

glu.nzlmax = (std::max)(Index(4), fillratio) * (annz+1) / 4; // estimated nnz in L factor

// Return the estimated size to the user if necessary

Index tempSpace;

tempSpace = (2*panel_size + 4 + LUNoMarker) * m * sizeof(Index) + (panel_size + 1) * m * sizeof(Scalar);

if (lwork == emptyIdxLU)

{

Index estimated_size;

estimated_size = (5 * n + 5) * sizeof(Index) + tempSpace

+ (glu.nzlmax + glu.nzumax) * sizeof(Index) + (glu.nzlumax+glu.nzumax) * sizeof(Scalar) + n;

return estimated_size;

}

// Setup the required space

// First allocate Integer pointers for L\U factors

glu.xsup.resize(n+1);

glu.supno.resize(n+1);

glu.xlsub.resize(n+1);

glu.xlusup.resize(n+1);

glu.xusub.resize(n+1);

// Reserve memory for L/U factors

do

{

if( (expand<ScalarVector>(glu.lusup, glu.nzlumax, 0, 0, num_expansions)<0)

|| (expand<ScalarVector>(glu.ucol, glu.nzumax, 0, 0, num_expansions)<0)

|| (expand<IndexVector> (glu.lsub, glu.nzlmax, 0, 0, num_expansions)<0)

|| (expand<IndexVector> (glu.usub, glu.nzumax, 0, 1, num_expansions)<0) )

{

//Reduce the estimated size and retry

glu.nzlumax /= 2;

glu.nzumax /= 2;

glu.nzlmax /= 2;

if (glu.nzlumax < annz ) return glu.nzlumax;

}

} while (!glu.lusup.size() || !glu.ucol.size() || !glu.lsub.size() || !glu.usub.size());

++num_expansions;

return 0;

} // end LuMemInit

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::memXpand | ( | VectorType & | vec, |

| Index & | maxlen, | ||

| Index | nbElts, | ||

| MemType | memtype, | ||

| Index & | num_expansions | ||

| ) | [protected] |

Expand the existing storage.

| vec | vector to expand | |

| [in,out] | maxlen | On input, previous size of vec (Number of elements to copy ). on output, new size |

| nbElts | current number of elements in the vector. | |

| memtype | Type of the element to expand | |

| num_expansions | Number of expansions |

Definition at line 209 of file SparseLU_Memory.h.

| void Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::panel_bmod | ( | const Index | m, |

| const Index | w, | ||

| const Index | jcol, | ||

| const Index | nseg, | ||

| ScalarVector & | dense, | ||

| ScalarVector & | tempv, | ||

| IndexVector & | segrep, | ||

| IndexVector & | repfnz, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Performs numeric block updates (sup-panel) in topological order.

Before entering this routine, the original nonzeros in the panel were already copied i nto the spa[m,w]

| m | number of rows in the matrix |

| w | Panel size |

| jcol | Starting column of the panel |

| nseg | Number of segments in the U part |

| dense | Store the full representation of the panel |

| tempv | working array |

| segrep | segment representative... first row in the segment |

| repfnz | First nonzero rows |

| glu | Global LU data. |

Definition at line 56 of file SparseLU_panel_bmod.h.

{

Index ksub,jj,nextl_col;

Index fsupc, nsupc, nsupr, nrow;

Index krep, kfnz;

Index lptr; // points to the row subscripts of a supernode

Index luptr; // ...

Index segsize,no_zeros ;

// For each nonz supernode segment of U[*,j] in topological order

Index k = nseg - 1;

const Index PacketSize = internal::packet_traits<Scalar>::size;

for (ksub = 0; ksub < nseg; ksub++)

{ // For each updating supernode

/* krep = representative of current k-th supernode

* fsupc = first supernodal column

* nsupc = number of columns in a supernode

* nsupr = number of rows in a supernode

*/

krep = segrep(k); k--;

fsupc = glu.xsup(glu.supno(krep));

nsupc = krep - fsupc + 1;

nsupr = glu.xlsub(fsupc+1) - glu.xlsub(fsupc);

nrow = nsupr - nsupc;

lptr = glu.xlsub(fsupc);

// loop over the panel columns to detect the actual number of columns and rows

Index u_rows = 0;

Index u_cols = 0;

for (jj = jcol; jj < jcol + w; jj++)

{

nextl_col = (jj-jcol) * m;

VectorBlock<IndexVector> repfnz_col(repfnz, nextl_col, m); // First nonzero column index for each row

kfnz = repfnz_col(krep);

if ( kfnz == emptyIdxLU )

continue; // skip any zero segment

segsize = krep - kfnz + 1;

u_cols++;

u_rows = (std::max)(segsize,u_rows);

}

if(nsupc >= 2)

{

Index ldu = internal::first_multiple<Index>(u_rows, PacketSize);

Map<ScalarMatrix, Aligned, OuterStride<> > U(tempv.data(), u_rows, u_cols, OuterStride<>(ldu));

// gather U

Index u_col = 0;

for (jj = jcol; jj < jcol + w; jj++)

{

nextl_col = (jj-jcol) * m;

VectorBlock<IndexVector> repfnz_col(repfnz, nextl_col, m); // First nonzero column index for each row

VectorBlock<ScalarVector> dense_col(dense, nextl_col, m); // Scatter/gather entire matrix column from/to here

kfnz = repfnz_col(krep);

if ( kfnz == emptyIdxLU )

continue; // skip any zero segment

segsize = krep - kfnz + 1;

luptr = glu.xlusup(fsupc);

no_zeros = kfnz - fsupc;

Index isub = lptr + no_zeros;

Index off = u_rows-segsize;

for (Index i = 0; i < off; i++) U(i,u_col) = 0;

for (Index i = 0; i < segsize; i++)

{

Index irow = glu.lsub(isub);

U(i+off,u_col) = dense_col(irow);

++isub;

}

u_col++;

}

// solve U = A^-1 U

luptr = glu.xlusup(fsupc);

Index lda = glu.xlusup(fsupc+1) - glu.xlusup(fsupc);

no_zeros = (krep - u_rows + 1) - fsupc;

luptr += lda * no_zeros + no_zeros;

MappedMatrixBlock A(glu.lusup.data()+luptr, u_rows, u_rows, OuterStride<>(lda) );

U = A.template triangularView<UnitLower>().solve(U);

// update

luptr += u_rows;

MappedMatrixBlock B(glu.lusup.data()+luptr, nrow, u_rows, OuterStride<>(lda) );

eigen_assert(tempv.size()>w*ldu + nrow*w + 1);

Index ldl = internal::first_multiple<Index>(nrow, PacketSize);

Index offset = (PacketSize-internal::first_default_aligned(B.data(), PacketSize)) % PacketSize;

MappedMatrixBlock L(tempv.data()+w*ldu+offset, nrow, u_cols, OuterStride<>(ldl));

L.setZero();

internal::sparselu_gemm<Scalar>(L.rows(), L.cols(), B.cols(), B.data(), B.outerStride(), U.data(), U.outerStride(), L.data(), L.outerStride());

// scatter U and L

u_col = 0;

for (jj = jcol; jj < jcol + w; jj++)

{

nextl_col = (jj-jcol) * m;

VectorBlock<IndexVector> repfnz_col(repfnz, nextl_col, m); // First nonzero column index for each row

VectorBlock<ScalarVector> dense_col(dense, nextl_col, m); // Scatter/gather entire matrix column from/to here

kfnz = repfnz_col(krep);

if ( kfnz == emptyIdxLU )

continue; // skip any zero segment

segsize = krep - kfnz + 1;

no_zeros = kfnz - fsupc;

Index isub = lptr + no_zeros;

Index off = u_rows-segsize;

for (Index i = 0; i < segsize; i++)

{

Index irow = glu.lsub(isub++);

dense_col(irow) = U.coeff(i+off,u_col);

U.coeffRef(i+off,u_col) = 0;

}

// Scatter l into SPA dense[]

for (Index i = 0; i < nrow; i++)

{

Index irow = glu.lsub(isub++);

dense_col(irow) -= L.coeff(i,u_col);

L.coeffRef(i,u_col) = 0;

}

u_col++;

}

}

else // level 2 only

{

// Sequence through each column in the panel

for (jj = jcol; jj < jcol + w; jj++)

{

nextl_col = (jj-jcol) * m;

VectorBlock<IndexVector> repfnz_col(repfnz, nextl_col, m); // First nonzero column index for each row

VectorBlock<ScalarVector> dense_col(dense, nextl_col, m); // Scatter/gather entire matrix column from/to here

kfnz = repfnz_col(krep);

if ( kfnz == emptyIdxLU )

continue; // skip any zero segment

segsize = krep - kfnz + 1;

luptr = glu.xlusup(fsupc);

Index lda = glu.xlusup(fsupc+1)-glu.xlusup(fsupc);// nsupr

// Perform a trianglar solve and block update,

// then scatter the result of sup-col update to dense[]

no_zeros = kfnz - fsupc;

if(segsize==1) LU_kernel_bmod<1>::run(segsize, dense_col, tempv, glu.lusup, luptr, lda, nrow, glu.lsub, lptr, no_zeros);

else if(segsize==2) LU_kernel_bmod<2>::run(segsize, dense_col, tempv, glu.lusup, luptr, lda, nrow, glu.lsub, lptr, no_zeros);

else if(segsize==3) LU_kernel_bmod<3>::run(segsize, dense_col, tempv, glu.lusup, luptr, lda, nrow, glu.lsub, lptr, no_zeros);

else LU_kernel_bmod<Dynamic>::run(segsize, dense_col, tempv, glu.lusup, luptr, lda, nrow, glu.lsub, lptr, no_zeros);

} // End for each column in the panel

}

} // End for each updating supernode

} // end panel bmod

| void Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::panel_dfs | ( | const Index | m, |

| const Index | w, | ||

| const Index | jcol, | ||

| MatrixType & | A, | ||

| IndexVector & | perm_r, | ||

| Index & | nseg, | ||

| ScalarVector & | dense, | ||

| IndexVector & | panel_lsub, | ||

| IndexVector & | segrep, | ||

| IndexVector & | repfnz, | ||

| IndexVector & | xprune, | ||

| IndexVector & | marker, | ||

| IndexVector & | parent, | ||

| IndexVector & | xplore, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Performs a symbolic factorization on a panel of columns [jcol, jcol+w)

A supernode representative is the last column of a supernode. The nonzeros in U[*,j] are segments that end at supernodes representatives

The routine returns a list of the supernodal representatives in topological order of the dfs that generates them. This list is a superset of the topological order of each individual column within the panel. The location of the first nonzero in each supernodal segment (supernodal entry location) is also returned. Each column has a separate list for this purpose.

Two markers arrays are used for dfs : marker[i] == jj, if i was visited during dfs of current column jj; marker1[i] >= jcol, if i was visited by earlier columns in this panel;

| [in] | m | number of rows in the matrix |

| [in] | w | Panel size |

| [in] | jcol | Starting column of the panel |

| [in] | A | Input matrix in column-major storage |

| [in] | perm_r | Row permutation |

| [out] | nseg | Number of U segments |

| [out] | dense | Accumulate the column vectors of the panel |

| [out] | panel_lsub | Subscripts of the row in the panel |

| [out] | segrep | Segment representative i.e first nonzero row of each segment |

| [out] | repfnz | First nonzero location in each row |

| [out] | xprune | The pruned elimination tree |

| [out] | marker | work vector |

| parent | The elimination tree | |

| xplore | work vector | |

| glu | The global data structure |

Definition at line 219 of file SparseLU_panel_dfs.h.

{

Index nextl_col; // Next available position in panel_lsub[*,jj]

// Initialize pointers

VectorBlock<IndexVector> marker1(marker, m, m);

nseg = 0;

panel_dfs_traits<IndexVector> traits(jcol, marker1.data());

// For each column in the panel

for (StorageIndex jj = StorageIndex(jcol); jj < jcol + w; jj++)

{

nextl_col = (jj - jcol) * m;

VectorBlock<IndexVector> repfnz_col(repfnz, nextl_col, m); // First nonzero location in each row

VectorBlock<ScalarVector> dense_col(dense,nextl_col, m); // Accumulate a column vector here

// For each nnz in A[*, jj] do depth first search

for (typename MatrixType::InnerIterator it(A, jj); it; ++it)

{

Index krow = it.row();

dense_col(krow) = it.value();

StorageIndex kmark = marker(krow);

if (kmark == jj)

continue; // krow visited before, go to the next nonzero

dfs_kernel(jj, perm_r, nseg, panel_lsub, segrep, repfnz_col, xprune, marker, parent,

xplore, glu, nextl_col, krow, traits);

}// end for nonzeros in column jj

} // end for column jj

}

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::pivotL | ( | const Index | jcol, |

| const RealScalar & | diagpivotthresh, | ||

| IndexVector & | perm_r, | ||

| IndexVector & | iperm_c, | ||

| Index & | pivrow, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Performs the numerical pivotin on the current column of L, and the CDIV operation.

Pivot policy : (1) Compute thresh = u * max_(i>=j) abs(A_ij); (2) IF user specifies pivot row k and abs(A_kj) >= thresh THEN pivot row = k; ELSE IF abs(A_jj) >= thresh THEN pivot row = j; ELSE pivot row = m;

Note: If you absolutely want to use a given pivot order, then set u=0.0.

| jcol | The current column of L | |

| diagpivotthresh | diagonal pivoting threshold | |

| [in,out] | perm_r | Row permutation (threshold pivoting) |

| [in] | iperm_c | column permutation - used to finf diagonal of Pc*A*Pc' |

| [out] | pivrow | The pivot row |

| glu | Global LU data |

Definition at line 60 of file SparseLU_pivotL.h.

{

Index fsupc = (glu.xsup)((glu.supno)(jcol)); // First column in the supernode containing the column jcol

Index nsupc = jcol - fsupc; // Number of columns in the supernode portion, excluding jcol; nsupc >=0

Index lptr = glu.xlsub(fsupc); // pointer to the starting location of the row subscripts for this supernode portion

Index nsupr = glu.xlsub(fsupc+1) - lptr; // Number of rows in the supernode

Index lda = glu.xlusup(fsupc+1) - glu.xlusup(fsupc); // leading dimension

Scalar* lu_sup_ptr = &(glu.lusup.data()[glu.xlusup(fsupc)]); // Start of the current supernode

Scalar* lu_col_ptr = &(glu.lusup.data()[glu.xlusup(jcol)]); // Start of jcol in the supernode

StorageIndex* lsub_ptr = &(glu.lsub.data()[lptr]); // Start of row indices of the supernode

// Determine the largest abs numerical value for partial pivoting

Index diagind = iperm_c(jcol); // diagonal index

RealScalar pivmax(-1.0);

Index pivptr = nsupc;

Index diag = emptyIdxLU;

RealScalar rtemp;

Index isub, icol, itemp, k;

for (isub = nsupc; isub < nsupr; ++isub) {

using std::abs;

rtemp = abs(lu_col_ptr[isub]);

if (rtemp > pivmax) {

pivmax = rtemp;

pivptr = isub;

}

if (lsub_ptr[isub] == diagind) diag = isub;

}

// Test for singularity

if ( pivmax <= RealScalar(0.0) ) {

// if pivmax == -1, the column is structurally empty, otherwise it is only numerically zero

pivrow = pivmax < RealScalar(0.0) ? diagind : lsub_ptr[pivptr];

perm_r(pivrow) = StorageIndex(jcol);

return (jcol+1);

}

RealScalar thresh = diagpivotthresh * pivmax;

// Choose appropriate pivotal element

{

// Test if the diagonal element can be used as a pivot (given the threshold value)

if (diag >= 0 )

{

// Diagonal element exists

using std::abs;

rtemp = abs(lu_col_ptr[diag]);

if (rtemp != RealScalar(0.0) && rtemp >= thresh) pivptr = diag;

}

pivrow = lsub_ptr[pivptr];

}

// Record pivot row

perm_r(pivrow) = StorageIndex(jcol);

// Interchange row subscripts

if (pivptr != nsupc )

{

std::swap( lsub_ptr[pivptr], lsub_ptr[nsupc] );

// Interchange numerical values as well, for the two rows in the whole snode

// such that L is indexed the same way as A

for (icol = 0; icol <= nsupc; icol++)

{

itemp = pivptr + icol * lda;

std::swap(lu_sup_ptr[itemp], lu_sup_ptr[nsupc + icol * lda]);

}

}

// cdiv operations

Scalar temp = Scalar(1.0) / lu_col_ptr[nsupc];

for (k = nsupc+1; k < nsupr; k++)

lu_col_ptr[k] *= temp;

return 0;

}

| void Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::pruneL | ( | const Index | jcol, |

| const IndexVector & | perm_r, | ||

| const Index | pivrow, | ||

| const Index | nseg, | ||

| const IndexVector & | segrep, | ||

| BlockIndexVector | repfnz, | ||

| IndexVector & | xprune, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

Prunes the L-structure.

It prunes the L-structure of supernodes whose L-structure contains the current pivot row "pivrow"

| jcol | The current column of L | |

| [in] | perm_r | Row permutation |

| [out] | pivrow | The pivot row |

| nseg | Number of segments | |

| segrep | ||

| repfnz | ||

| [out] | xprune | |

| glu | Global LU data |

Definition at line 53 of file SparseLU_pruneL.h.

{

// For each supernode-rep irep in U(*,j]

Index jsupno = glu.supno(jcol);

Index i,irep,irep1;

bool movnum, do_prune = false;

Index kmin = 0, kmax = 0, minloc, maxloc,krow;

for (i = 0; i < nseg; i++)

{

irep = segrep(i);

irep1 = irep + 1;

do_prune = false;

// Don't prune with a zero U-segment

if (repfnz(irep) == emptyIdxLU) continue;

// If a snode overlaps with the next panel, then the U-segment

// is fragmented into two parts -- irep and irep1. We should let

// pruning occur at the rep-column in irep1s snode.

if (glu.supno(irep) == glu.supno(irep1) ) continue; // don't prune

// If it has not been pruned & it has a nonz in row L(pivrow,i)

if (glu.supno(irep) != jsupno )

{

if ( xprune (irep) >= glu.xlsub(irep1) )

{

kmin = glu.xlsub(irep);

kmax = glu.xlsub(irep1) - 1;

for (krow = kmin; krow <= kmax; krow++)

{

if (glu.lsub(krow) == pivrow)

{

do_prune = true;

break;

}

}

}

if (do_prune)

{

// do a quicksort-type partition

// movnum=true means that the num values have to be exchanged

movnum = false;

if (irep == glu.xsup(glu.supno(irep)) ) // Snode of size 1

movnum = true;

while (kmin <= kmax)

{

if (perm_r(glu.lsub(kmax)) == emptyIdxLU)

kmax--;

else if ( perm_r(glu.lsub(kmin)) != emptyIdxLU)

kmin++;

else

{

// kmin below pivrow (not yet pivoted), and kmax

// above pivrow: interchange the two suscripts

std::swap(glu.lsub(kmin), glu.lsub(kmax));

// If the supernode has only one column, then we

// only keep one set of subscripts. For any subscript

// intercnahge performed, similar interchange must be

// done on the numerical values.

if (movnum)

{

minloc = glu.xlusup(irep) + ( kmin - glu.xlsub(irep) );

maxloc = glu.xlusup(irep) + ( kmax - glu.xlsub(irep) );

std::swap(glu.lusup(minloc), glu.lusup(maxloc));

}

kmin++;

kmax--;

}

} // end while

xprune(irep) = StorageIndex(kmin); //Pruning

} // end if do_prune

} // end pruning

} // End for each U-segment

}

| void Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::relax_snode | ( | const Index | n, |

| IndexVector & | et, | ||

| const Index | relax_columns, | ||

| IndexVector & | descendants, | ||

| IndexVector & | relax_end | ||

| ) | [protected] |

Identify the initial relaxed supernodes.

This routine is applied to a column elimination tree. It assumes that the matrix has been reordered according to the postorder of the etree

| n | the number of columns |

| et | elimination tree |

| relax_columns | Maximum number of columns allowed in a relaxed snode |

| descendants | Number of descendants of each node in the etree |

| relax_end | last column in a supernode |

Definition at line 47 of file SparseLU_relax_snode.h.

{

// compute the number of descendants of each node in the etree

Index parent;

relax_end.setConstant(emptyIdxLU);

descendants.setZero();

for (Index j = 0; j < n; j++)

{

parent = et(j);

if (parent != n) // not the dummy root

descendants(parent) += descendants(j) + 1;

}

// Identify the relaxed supernodes by postorder traversal of the etree

Index snode_start; // beginning of a snode

for (Index j = 0; j < n; )

{

parent = et(j);

snode_start = j;

while ( parent != n && descendants(parent) < relax_columns )

{

j = parent;

parent = et(j);

}

// Found a supernode in postordered etree, j is the last column

relax_end(snode_start) = StorageIndex(j); // Record last column

j++;

// Search for a new leaf

while (descendants(j) != 0 && j < n) j++;

} // End postorder traversal of the etree

}

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::snode_bmod | ( | const Index | jcol, |

| const Index | fsupc, | ||

| ScalarVector & | dense, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

| Index Eigen::internal::SparseLUImpl< Scalar, StorageIndex >::snode_dfs | ( | const Index | jcol, |

| const Index | kcol, | ||

| const MatrixType & | mat, | ||

| IndexVector & | xprune, | ||

| IndexVector & | marker, | ||

| GlobalLU_t & | glu | ||

| ) | [protected] |

friend struct column_dfs_traits [friend] |

Definition at line 60 of file SparseLUImpl.h.